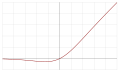

Activation functions can be used either through `layer_activation()` or through the `activation` argument supported by all forward layers. The same argument in Python Keras looks like `model.add(Dense(64, activation='relu'))`. Each activation returns a tensor with the same shape and dtype as `x`. For `activation_softmax()`, `axis` is an integer: the axis along which the softmax normalization is applied.

`activation_selu()` is meant to be used together with the "lecun_normal" initialization and with the dropout variant "AlphaDropout".

References:

- `activation_swish()`: Searching for Activation Functions
- `activation_gelu()`: Gaussian Error Linear Units (GELUs)
- `activation_selu()`: Self-Normalizing Neural Networks
- `activation_elu()`: Fast and Accurate Deep Network Learning by Exponential Linear Units (ELUs)

The swish function is a mathematical function defined as follows:

$$\operatorname{swish}_\beta(x) = x \cdot \operatorname{sigmoid}(\beta x) = \frac{x}{1 + e^{-\beta x}}$$

where $\beta$ is either a constant or a trainable parameter, depending on the model. For $\beta = 1$, the function becomes equivalent to the Sigmoid Linear Unit, or SiLU, first proposed alongside the GELU in 2016. The SiLU was later rediscovered in 2017 as the Sigmoid-weighted Linear Unit.

Implementation of the Swish activation function in Keras: Swish is implemented as a custom function, which, once defined, has to be registered with a key in the `Activation` class.
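To make the definition of swish concrete, here is a minimal framework-independent sketch in plain Python. It is not the Keras implementation; the helper names `sigmoid`, `swish`, and `silu` are chosen here for illustration, and the functions work on single floats rather than tensors.

```python
import math


def sigmoid(z):
    """Numerically stable logistic sigmoid for a single float."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)  # z < 0 here, so exp(z) cannot overflow
    return ez / (1.0 + ez)


def swish(x, beta=1.0):
    """swish_beta(x) = x * sigmoid(beta * x)."""
    return x * sigmoid(beta * x)


def silu(x):
    """SiLU is swish with beta fixed to 1."""
    return swish(x, beta=1.0)


if __name__ == "__main__":
    # Columns: x, beta = 0 (gives x / 2), beta = 1 (SiLU), beta = 10 (close to ReLU)
    for x in (-2.0, 0.0, 2.0):
        print(x, swish(x, beta=0.0), silu(x), swish(x, beta=10.0))
```

With `beta = 0` the sigmoid factor is always 0.5, so the function reduces to `x / 2`; as `beta` grows, the output approaches `max(0, x)`.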

The Google Brain team proposed a new activation function, named Swish, which is simply f(x) = x · sigmoid(x). Their experiments show that Swish tends to …

```python
from keras import backend as K
from keras.utils import get_custom_objects
from keras.layers import Activation


class Swish(Activation):
    """Activation layer that carries the name 'swish' and a beta value."""

    def __init__(self, activation, beta, **kwargs):
        super(Swish, self).__init__(activation, **kwargs)
        self.__name__ = 'swish'
        self.beta = beta  # stored on the layer; swish() below takes its own beta


def swish(x, beta=1.0):
    # x * sigmoid(beta * x); beta = 1 gives f(x) = x * sigmoid(x)
    return K.sigmoid(beta * x) * x
```

Usage of the built-in activations in R:

```r
activation_relu(x, alpha = 0, max_value = NULL, threshold = 0)
activation_elu(x, alpha = 1)
activation_selu(x)
activation_hard_sigmoid(x)
activation_linear(x)
activation_sigmoid(x)
activation_softmax(x, axis = -1)
activation_softplus(x)
activation_softsign(x)
activation_tanh(x)
activation_exponential(x)
activation_gelu(x, approximate = FALSE)
activation_swish(x)
```

Among the arguments, `threshold` is the threshold value for thresholded activation.
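The `alpha`, `max_value`, and `threshold` arguments of `activation_relu()` can be easier to follow with a scalar reference version. The sketch below is plain Python, not the library code. It assumes the piecewise definition given in the Keras documentation for ReLU: `max_value` at or above the cap, `x` between the threshold and the cap, and `alpha * (x - threshold)` below the threshold. The function name `relu` is illustrative.

```python
def relu(x, alpha=0.0, max_value=None, threshold=0.0):
    """Scalar sketch of a generalized ReLU (assumed piecewise definition)."""
    if max_value is not None and x >= max_value:
        return max_value            # saturate at the cap
    if x >= threshold:
        return x                    # identity region
    return alpha * (x - threshold)  # leaky slope below the threshold


if __name__ == "__main__":
    print(relu(2.0))                  # plain ReLU, positive input
    print(relu(10.0, max_value=6.0))  # capped, as in "ReLU6"
    print(relu(-2.0, alpha=0.1))      # leaky slope for negative input
    print(relu(0.5, threshold=1.0))   # below the threshold
```

With the defaults (`alpha = 0`, no `max_value`, `threshold = 0`) this reduces to the standard `max(0, x)`.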
